Posted on 17 June 2020, updated on 12 May 2023.

Launched in 2013, Docker represents a real shift in software development. Its mantra: "Build Once, Run Anywhere". Add a Dockerfile to your app and it becomes a package, a container, that you can ship seemingly anywhere, as long as Docker is installed. Along with other technologies such as Kubernetes, it fueled the Cloud Computing revolution, with stateless applications that can rescale on demand and be deployed automatically through CI/CD.

So, is the case closed? Will we never need to think about where our application runs again? Well, it's not that simple: a whole part of the world is left out. IoT devices, smartphones, and all these small gadgets don't run on the same hardware as our laptops and desktop computers.

You might think it's not that much of an issue: websites and other services tend to run on classic Linux servers. However, there are many situations where using a bulky server is not an option:

- More and more IoT objects need to connect to the Internet, and many of them run a Linux kernel. Reusing the web application we've already developed for a server would be ideal. The same goes for old computers lying around, on which your new 64-bit app won't run.

- Distributed computing at a large scale is a promising research subject: any piece of hardware could be part of a resilient supercomputer. That is the idea behind fog and mist computing, which make use of every small IoT device available.

- These ARM chips are more power-efficient, and they can help reduce the overall energy consumption and carbon footprint of our services. Amazon Web Services offers EC2 instances running on its own ARM-based Graviton processors, which reduce energy consumption and therefore the bill.

- Last but not least, the market might change: Apple has announced a shift to its own ARM processors for the Mac, abandoning Intel's x86 CPUs.

Of course, many IoT platforms don't have the resources to run containers. But there are real use cases where a machine is powerful enough to run Docker, yet small enough to fit in your pocket and energy-efficient.

That's the case with the Raspberry Pi, a very small computer used in many IoT and home-server projects. It's the platform we'll use for this post.

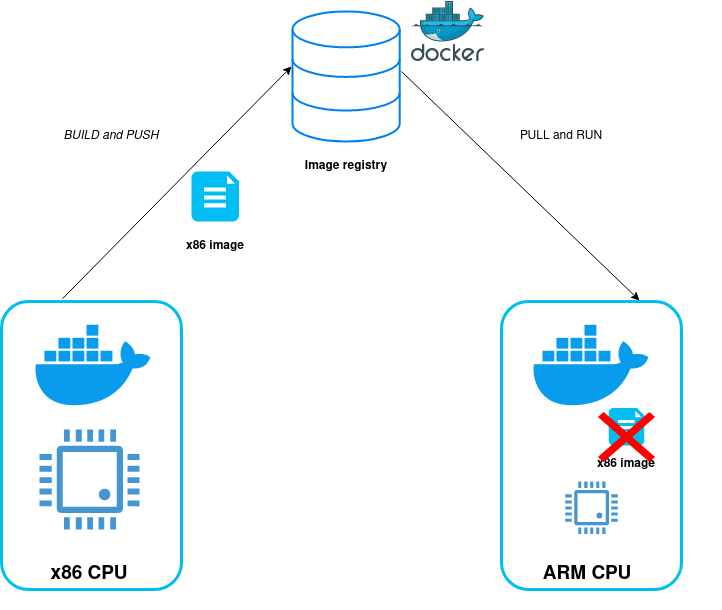

To restate the issue: Docker was designed for your everyday computer, which runs on an x86-64 CPU (more about that in a few paragraphs), not for small IoT devices such as the Raspberry Pi, which runs on an ARM CPU.

The breaking example

Let's say you want to deploy your great Python application with Docker. To keep things simple, we'll create a small Python app that just tells the date and time, using an external API (what an amazing SaaS concept). That's probably not very useful, but it's enough as an example for this post. Let's call it pytime!


The app is that simple: its only dependency is requests, which is used for the API call.
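The original pytime source isn't reproduced in this version of the post. As a rough stand-in, here is a minimal sketch of what such an app could look like. Two assumptions to keep in mind: the API is WorldTimeAPI (the post doesn't say which one pytime calls), and the snippet uses the standard library's urllib instead of requests so it runs without any third-party package.

```python
"""pytime: print the current date and time fetched from a web API (sketch)."""
import json
import urllib.request
from datetime import datetime

# Assumption: WorldTimeAPI is the time source; the real pytime app may call
# a different API. Only the "datetime" field of the JSON response is used.
TIME_API_URL = "http://worldtimeapi.org/api/timezone/Etc/UTC"


def format_time(payload: dict) -> str:
    """Turn the API's JSON payload into a human-readable sentence."""
    now = datetime.fromisoformat(payload["datetime"])
    return now.strftime("It is %H:%M:%S on %A %d %B %Y.")


def get_time(url: str = TIME_API_URL, timeout: float = 5.0) -> str:
    """Call the time API and return the formatted date and time."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        payload = json.load(response)
    return format_time(payload)
```

Calling print(get_time()) prints the current UTC time as a sentence built by format_time. Keeping the formatting separate from the HTTP call makes it testable without network access.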

Now let's create a simple Dockerfile for it. We'll take advantage of Docker's build cache by installing the dependencies first and adding the source code afterward, so that a code change doesn't trigger a reinstall of the dependencies.
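Why does the order matter? Docker caches each build step and reuses it as long as the step and everything before it are unchanged. The sketch below is a deliberately simplified model of that idea, not Docker's actual cache algorithm: each step's key hashes the previous key, the instruction, and the content of the files it copies. The Dockerfile steps are hypothetical, written for illustration.

```python
"""A simplified model of Docker's build cache (not Docker's real algorithm)."""
import hashlib


def layer_keys(steps, files):
    """Return one cache key per step.

    Each step is (instruction, copied_paths). A key hashes the parent key,
    the instruction text and the content of every copied file, so a change
    invalidates that step and every step after it.
    """
    keys = []
    parent = ""
    for instruction, copied_paths in steps:
        digest = hashlib.sha256()
        digest.update(parent.encode())
        digest.update(instruction.encode())
        for path in sorted(copied_paths):
            digest.update(path.encode())
            digest.update(files[path].encode())
        parent = digest.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_steps(steps, old_files, new_files):
    """List the instructions whose cache key changed between two builds."""
    before = layer_keys(steps, old_files)
    after = layer_keys(steps, new_files)
    return [step[0] for step, b, a in zip(steps, before, after) if b != a]


# Hypothetical project: only app.py changes between the two builds.
files_v1 = {"requirements.txt": "requests\n", "app.py": "print('v1')\n"}
files_v2 = {**files_v1, "app.py": "print('v2')\n"}

deps_first = [
    ("FROM python:3.8.2", []),
    ("COPY requirements.txt .", ["requirements.txt"]),
    ("RUN pip install -r requirements.txt", []),
    ("COPY . .", ["requirements.txt", "app.py"]),
]
code_first = [
    ("FROM python:3.8.2", []),
    ("COPY . .", ["requirements.txt", "app.py"]),
    ("RUN pip install -r requirements.txt", []),
]

print(rebuilt_steps(deps_first, files_v1, files_v2))  # ['COPY . .']
print(rebuilt_steps(code_first, files_v1, files_v2))  # ['COPY . .', 'RUN pip install -r requirements.txt']
```

With dependencies first, editing app.py only invalidates the final COPY, so the pip install step is served from the cache. Copying the whole project first forces the dependencies to be reinstalled on every code change.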

Now, on your computer, you can build and push the app with Docker. I assume you're already logged in to your Docker registry (if not, simply run docker login for the default one, DockerHub).

$ docker build -t padok/pytime:v1.0 .

$ docker push padok/pytime:v1.0

It would be better to deploy it on a proper infrastructure such as Kubernetes, using Helm charts for deployments, but let's keep it simple: we ssh into our server and run the app directly with Docker.

However, our server here is a Raspberry Pi 4, which runs on an ARM CPU (more on that later).

$ ssh pi@raspberrypi

$ docker run padok/pytime:v1.0

Unable to find image 'padok/pytime:v1.0' locally

v1.0: Pulling from padok/pytime

...

Status: Downloaded newer image for padok/pytime:v1.0

standard_init_linux.go:211: exec user process caused "exec format error"

Well, that didn't go well... On your computer or a classic server, you shouldn't encounter any issues. However, on an ARM processor, for example on a Raspberry Pi, you get a strange error message:

standard_init_linux.go:211: exec user process caused "exec format error"

The error is not very explicit, but with some research you'll find that the binaries in the Docker image were compiled for an instruction set the ARM machine does not support. That would be the case for many IoT projects, and it's not what I expected for such a simple application. Does Docker lie when it proclaims "Build Once, Run Anywhere!"? Well, it's a bit more complicated. Let's dive in.

About CPU architectures

Before reviewing some Docker features, it's important to understand CPU architectures, also called instruction sets.

CPUs can be classified into different categories depending on how they are designed internally, and therefore how software communicates with them. Your everyday computer, or the server that serves this article, most likely runs on an Intel or AMD CPU, the market leaders, which both speak the same language: the x86 instruction set.

However, a smartphone or a small IoT object, which needs to be power-efficient, will use smaller CPUs, which use a different instruction set, such as ARM or RISC-V.

This language is the abstraction of the physical CPU that software sees. This very low-level language is the bridge between our high-level human code (a = a + 1) and what actually happens: fetch the value of a from memory, add 1, and store the new value back at the variable's memory address.
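To make the idea concrete, here is a toy illustration with two made-up instruction sets (they are not real x86 or ARM): a register machine and a stack machine. The same statement, a = a + 1, compiles to a different sequence of instructions for each, and a program built for one machine is meaningless to the other.

```python
"""Two toy instruction sets running the same statement, a = a + 1 (illustration only)."""


def run_isa_a(program, memory):
    """Toy ISA "A": a register machine with load/add/store instructions."""
    reg = 0
    for op, arg in program:
        if op == "LOAD":      # fetch the value at address `arg` into the register
            reg = memory[arg]
        elif op == "ADDI":    # add an immediate value to the register
            reg += arg
        elif op == "STORE":   # write the register back to address `arg`
            memory[arg] = reg
        else:
            raise ValueError(f"ISA A: unknown instruction {op!r}")
    return memory


def run_isa_b(program, memory):
    """Toy ISA "B": a stack machine with different opcodes for the same work."""
    stack = []
    for op, arg in program:
        if op == "PUSH_MEM":      # push the value stored at address `arg`
            stack.append(memory[arg])
        elif op == "PUSH_CONST":  # push a constant
            stack.append(arg)
        elif op == "ADD":         # pop two values, push their sum
            right, left = stack.pop(), stack.pop()
            stack.append(left + right)
        elif op == "POP_MEM":     # pop the top value into address `arg`
            memory[arg] = stack.pop()
        else:
            raise ValueError(f"ISA B: unknown instruction {op!r}")
    return memory


# The same high-level statement "a = a + 1", compiled once for each toy ISA.
program_a = [("LOAD", "a"), ("ADDI", 1), ("STORE", "a")]
program_b = [("PUSH_MEM", "a"), ("PUSH_CONST", 1), ("ADD", None), ("POP_MEM", "a")]
```

run_isa_a(program_a, {"a": 41}) and run_isa_b(program_b, {"a": 41}) both return {"a": 42}, but run_isa_a(program_b, {"a": 41}) raises ValueError: a toy version of what the Raspberry Pi did with our x86-64 image.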

For example, here is a C snippet translated into x86 assembly, which is very close to the actual instruction set language.

Translating human-readable code into these low-level instructions is called compilation. For Docker, the analogous step is the build. In the preceding example, we built an app for the x86-64 architecture (the 64-bit flavor of x86, also called amd64) and tried to run it on an ARM CPU: it simply cannot work!
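On Linux, executables use the ELF format, and the ELF header records which instruction set a binary was compiled for in a field called e_machine. When that field doesn't match the CPU, the kernel refuses to run the file, which is the "exec format error" we saw earlier. Here is a small sketch that reads the field; the table only lists a handful of values from the ELF specification.

```python
"""Read which CPU architecture an ELF binary was compiled for (sketch)."""
import struct

# A few e_machine values from the ELF specification.
ELF_MACHINES = {
    3: "x86 (i386)",
    20: "PowerPC",
    21: "PowerPC 64",
    22: "IBM S/390",
    40: "ARM (32-bit)",
    62: "x86-64 (amd64)",
    183: "AArch64 (arm64)",
    243: "RISC-V",
}


def elf_machine(header: bytes) -> str:
    """Return the target architecture recorded in an ELF header."""
    if header[:4] != b"\x7fELF":
        raise ValueError("not an ELF binary")
    # Byte 5 (EI_DATA) gives the byte order: 1 = little-endian, 2 = big-endian.
    byte_order = "<" if header[5] == 1 else ">"
    # e_machine is a 2-byte field at offset 18, right after e_ident and e_type.
    (machine,) = struct.unpack_from(byte_order + "H", header, 18)
    return ELF_MACHINES.get(machine, f"unknown (e_machine={machine})")


def binary_architecture(path: str) -> str:
    """Read the first bytes of a file and report its target architecture."""
    with open(path, "rb") as binary:
        return elf_machine(binary.read(20))
```

On a Linux host, binary_architecture("/bin/ls") should report x86-64 (amd64) on a typical laptop, and ARM or AArch64 on a Raspberry Pi, depending on whether its OS is 32- or 64-bit.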

But are we stuck with running Docker only on big, power-hungry x86 CPUs, leaving behind part of the IoT ecosystem? Luckily, Docker handles multiple architectures natively.

How Docker handles multiple architectures

On the ARM server (the Raspberry Pi), Docker seems to work fine.

$ docker run python:3.8.2 python --version

Python 3.8.2

In fact, if we had run docker build on this ARM machine, it would have created an image for this specific CPU architecture. The issue here is that we created an x86-64 image and tried to use it on an ARM machine, which cannot work.

By default, Docker builds and pulls the image for the platform it is running on. On DockerHub, you can explore which platforms are available for each version of an image. For example, for python:3.8.2:

Using the digest column, you can specify the exact image you want to pull. For example, if you specifically want the arm/v7 (32-bit) image, you can pull it by its digest:

$ docker pull python@sha256:14984d57dabd5ddb4789a65e5d64965473121c4ded5f8143161fbeb28713eebf

However, pinning a digest freezes the image in time, so you won't receive security fixes. A better option is to select the architecture with a prefix. For example:

$ docker pull arm32v7/python:3.8.2
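The prefix is the name of a per-architecture DockerHub organization that mirrors the official images (amd64, arm32v7, arm64v8, and so on). Here is a sketch of the mapping from Docker platform names to these prefixes, based on the architecture namespaces documented for Docker Official Images. It only applies to official images such as python, and not every tag is published under every prefix.

```python
"""Map a Docker platform string to its official-image architecture prefix (sketch)."""

# Architecture namespaces used by Docker Official Images on DockerHub.
PLATFORM_TO_PREFIX = {
    "linux/amd64": "amd64",
    "linux/386": "i386",
    "linux/arm64": "arm64v8",
    "linux/arm/v7": "arm32v7",
    "linux/arm/v6": "arm32v6",
    "linux/arm/v5": "arm32v5",
    "linux/ppc64le": "ppc64le",
    "linux/s390x": "s390x",
    "linux/riscv64": "riscv64",
}


def prefixed_image(image: str, platform: str) -> str:
    """Return e.g. 'arm32v7/python:3.8.2' for ('python:3.8.2', 'linux/arm/v7')."""
    try:
        prefix = PLATFORM_TO_PREFIX[platform]
    except KeyError:
        raise ValueError(f"no known prefix for platform {platform!r}") from None
    return f"{prefix}/{image}"
```

For instance, prefixed_image("python:3.8.2", "linux/arm/v7") gives arm32v7/python:3.8.2, the image pulled above.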

These solutions, either automatic platform detection or manual selection, allow us to avoid relying on a complex tagging system such as myapp:1.3.2-arm.

Here we have a solution: just build the app directly on the ARM server, and it will run correctly on that specific CPU. But this creates new issues:

- First, we've totally broken our CI/CD process. No more image registry: you have to launch a new build on every target device each time a new version is released. You could install a CI/CD runner directly on the machine, but it would be limited by the following point.

- Secondly, ARM processors are often slow and limited in power. The build could steal resources from the main workload and take quite a long time.

We want to be able to build our app once, on our machine or in a CI/CD pipeline, and forget about the hardware it will run on! Luckily, Docker also has a solution for us.

Multi-architecture builds with Buildx

Buildx is an experimental feature of Docker, so check that you have at least Docker 19.03 by running docker version. Then, you can enable buildx (and other experimental features) for the current shell:

$ export DOCKER_CLI_EXPERIMENTAL=enabled

Now you should be able to run:

$ docker buildx ls

NAME/NODE DRIVER/ENDPOINT STATUS PLATFORMS

default * docker

default default running linux/amd64, linux/386

Here we can see that our current Docker builder can build images for two different architectures:

- amd64, which refers to the x86-64 instruction set, the 64-bit version of the mainstream x86 instruction set. That's the architecture your computer is probably running on. It owes its name to AMD, which released the first 64-bit version of x86.

- 386, often referred to as i386 (i as in Intel), is the legacy 32-bit version of the x86 instruction set.
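The names Docker uses don't always match what the kernel reports: uname -m prints x86_64 where Docker says amd64, and aarch64 where Docker says arm64. The sketch below translates common values using Python's platform.machine(), which returns the same string as uname -m on Linux; the table is not exhaustive.

```python
"""Translate the kernel's machine name (uname -m) into a Docker platform (sketch)."""
import platform

# Common `uname -m` values and the Docker platform names they correspond to.
MACHINE_TO_PLATFORM = {
    "x86_64": "linux/amd64",
    "amd64": "linux/amd64",
    "i386": "linux/386",
    "i686": "linux/386",
    "aarch64": "linux/arm64",
    "arm64": "linux/arm64",
    "armv7l": "linux/arm/v7",
    "armv6l": "linux/arm/v6",
    "ppc64le": "linux/ppc64le",
    "s390x": "linux/s390x",
    "riscv64": "linux/riscv64",
}


def docker_platform(machine: str = "") -> str:
    """Return the Docker platform for `machine`, defaulting to this host."""
    machine = machine or platform.machine()
    try:
        return MACHINE_TO_PLATFORM[machine.lower()]
    except KeyError:
        raise ValueError(f"unmapped machine type {machine!r}") from None
```

On a Raspberry Pi running a 32-bit OS, uname -m typically reports armv7l, which maps to linux/arm/v7: the platform Docker picks by default on that host.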

With buildx, you could build a 32-bit version of your Docker image for an old computer! And it can also work for our exotic ARM CPU.

However, we will need yet another tool for this: qemu, a CPU emulator. Cross-compilation is building software for an architecture B on a computer running on architecture A, for example building for ARM on an amd64 platform. With qemu, buildx achieves this by emulating the target CPU, so each build step runs as if it were on an ARM machine.

That's exactly what we want! Using low-level Linux features that I won't explain here (if you're interested, read this article), we can give buildx some qemu steroids!

$ docker run --rm --privileged multiarch/qemu-user-static --reset -p yes

This registration only lasts until the next reboot: after a restart, you'll have to run this command again.
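Under the hood, the qemu-user-static command registers qemu as the interpreter for foreign-architecture binaries through the kernel's binfmt_misc mechanism, with one entry per architecture under /proc/sys/fs/binfmt_misc. Since the registration disappears on reboot, a quick check tells you whether you need to run the command again. This sketch assumes entries named qemu-* (the naming used by multiarch/qemu-user-static) and the usual layout of those files: a first line reading enabled or disabled, then lines such as interpreter /usr/bin/qemu-aarch64-static.

```python
"""Check which qemu interpreters are registered with binfmt_misc (Linux, sketch)."""
from pathlib import Path
from typing import Dict, Optional

BINFMT_DIR = Path("/proc/sys/fs/binfmt_misc")


def parse_binfmt_entry(text: str) -> Optional[str]:
    """Return the interpreter of an enabled binfmt_misc entry, else None."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "enabled":
        return None  # empty or disabled entry
    for line in lines[1:]:
        if line.startswith("interpreter "):
            return line.split(" ", 1)[1].strip()
    return None


def qemu_handlers(binfmt_dir: Path = BINFMT_DIR) -> Dict[str, str]:
    """Map each enabled qemu-* handler (e.g. qemu-aarch64) to its interpreter."""
    handlers: Dict[str, str] = {}
    if not binfmt_dir.is_dir():
        return handlers  # binfmt_misc not mounted, or not a Linux host
    for entry in sorted(binfmt_dir.glob("qemu-*")):
        interpreter = parse_binfmt_entry(entry.read_text())
        if interpreter:
            handlers[entry.name] = interpreter
    return handlers
```

An empty dictionary from qemu_handlers() means no qemu handler is registered, so it's time to rerun the multiarch/qemu-user-static command.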

Now let's create a new builder with buildx, which will be able to use qemu.

$ docker buildx create --name mybuilder --driver docker-container --use

Now let's display the platforms we can build for. We first need to bootstrap the builder, i.e. initialize it, which is what the --bootstrap flag does.

$ docker buildx inspect --bootstrap

Name: mybuilder

Driver: docker-container

Nodes:

Name: mybuilder0

Endpoint: unix:///var/run/docker.sock

Status: running

Platforms: linux/amd64, linux/arm64, linux/riscv64, linux/ppc64le, linux/s390x, linux/386, linux/arm/v7, linux/arm/v6

We have many more platforms available. If you check on DockerHub, you'll see that there is no python:3.8.2 base image for riscv64, so we have to remove it from the list. We'll build for all the remaining platforms; to speed up the process, you could restrict the list to the x86 and ARM ones.
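If you script your builds, dropping unsupported platforms can be automated. This sketch parses the Platforms: line printed by docker buildx inspect and joins the remaining platforms into a value for --platform. The output format may differ between buildx versions, so treat the parsing as an assumption.

```python
"""Build a --platform value from `docker buildx inspect` output (sketch)."""
from typing import List, Set


def builder_platforms(inspect_output: str) -> List[str]:
    """Extract the platforms listed on the 'Platforms:' line."""
    for line in inspect_output.splitlines():
        line = line.strip()
        if line.startswith("Platforms:"):
            values = line[len("Platforms:"):].split(",")
            return [value.strip() for value in values if value.strip()]
    return []


def platform_flag(inspect_output: str, unsupported: Set[str]) -> str:
    """Join every builder platform except the unsupported ones, keeping order."""
    kept = [p for p in builder_platforms(inspect_output) if p not in unsupported]
    return ",".join(kept)
```

Applied to the output above with {"linux/riscv64"} as the unsupported set, platform_flag returns linux/amd64,linux/arm64,linux/ppc64le,linux/s390x,linux/386,linux/arm/v7,linux/arm/v6.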

The CLI is then the same as docker build, with the addition of the --platform argument, which takes the list of platforms you want to build for. You can also add --push to release your images directly to a registry (be sure you're logged in!). Here I'll push my images to DockerHub.

$ docker buildx build --platform linux/amd64,linux/arm64,linux/ppc64le,linux/s390x,linux/386,linux/arm/v7,linux/arm/v6 -t padok/pytime:v2.0 . --push

...

=> => pushing layers 10.3s

=> => pushing manifest for docker.io/dixneuf19/pytime:v2.0 8.6s

If everything goes well, you should see all your images on DockerHub.

Now, back on the Raspberry Pi, we can successfully run the app:

$ docker run padok/pytime:v2.0

If your app is big or uses modules that need compiling, such as cryptography in Python, you'll notice that the build can be quite long (from 30 seconds to 15 minutes!). That's a common issue with cross-compilation through emulation.

But it is still often better than building directly on the target device. Indeed, IoT objects and small computers don't have the resources to build an app or a Docker image efficiently.

That's all fine, but I feel we're missing something... Building and releasing the app from your own computer shouldn't be the norm, and I would bet that Windows users are struggling to follow this tutorial.

Let's move all this logic into the cloud, with a CI/CD workflow!

CI/CD: GitHub Actions and Buildx

For this example, we chose GitHub Actions because it's very simple to use and share, but you could adapt this tutorial to any CI/CD solution such as GitLab CI, Travis, CircleCI, or Jenkins. If you don't know anything about GitHub Actions, check out our article about it!

The main idea behind GitHub Actions is to create reusable components and share them. There is an excellent Buildx action that fits our needs exactly: it creates a builder with qemu already configured.

Then we add steps for the build, the DockerHub login, and finally the release of the app. Store the workflow file in .github/workflows and we're good to go!

We can check if our action was successful:

Now our application is automatically available on all these platforms. Be sure to check that the base image (here python:3.8.2) exists on DockerHub for each target platform, as shown above.
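You can also check this from the command line: docker manifest inspect python:3.8.2 prints the image's manifest list as JSON (like buildx, it was an experimental command in older Docker versions). Each entry carries a platform object (os, architecture and an optional variant) and the digest of the matching image. The sketch below parses that JSON and lists the target platforms the base image lacks; it assumes a multi-platform image, since a single-platform image has no manifests array.

```python
"""List the platforms published in an image's manifest list (sketch)."""
import json
from typing import Dict, List


def manifest_platforms(manifest_list_json: str) -> Dict[str, str]:
    """Map 'os/architecture[/variant]' to the digest of each platform image."""
    manifest_list = json.loads(manifest_list_json)
    platforms = {}
    for manifest in manifest_list.get("manifests", []):
        spec = manifest.get("platform", {})
        variant = spec.get("variant")
        if spec.get("architecture") == "arm64" and variant == "v8":
            variant = None  # linux/arm64/v8 is usually written linux/arm64
        parts = [spec.get("os"), spec.get("architecture"), variant]
        name = "/".join(part for part in parts if part)
        platforms[name] = manifest["digest"]
    return platforms


def missing_platforms(manifest_list_json: str, wanted: List[str]) -> List[str]:
    """Return the wanted platforms that the base image does not provide."""
    available = manifest_platforms(manifest_list_json)
    return [target for target in wanted if target not in available]
```

Fed the output of docker manifest inspect and the list passed to --platform, missing_platforms returns the platforms you should drop before building.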

If you were attentive enough, you might have noticed that we don't build Windows images, which sometimes exist on DockerHub. Luckily, recent versions of Windows ship a Linux kernel (through WSL 2) and can therefore run Linux containers.

Anyway, Windows is seldom the build target, except for some specific .NET applications. We’ve got an article about using Docker on Windows.

Note that we could also have used multiple runners, each running on different hardware, and thereby avoided cross-compilation. However, that requires much more effort in terms of infrastructure.

In this article, we demonstrated how Docker is able to build applications for several CPU architectures, which is essential to support the growing IoT ecosystem. While still experimental, Docker Buildx is a game-changer for delivering containers to multiple platforms, and it is already easy to integrate into our CI/CD process.

All the code, including the CI/CD process, is available in this GitHub repository!